In just a few weeks, the whole landscape of cold fusion and LENR has changed significantly and, as many have noted, 2015 might bring a breakthrough for LENR in general, with increased public awareness, scientific acceptance and maybe even commercial applications. This is great news. (from http://animpossibleinvention.com)

Most important is the apparent replication of the E-Cat phenomenon by the Russian scientist Alexander Parkhomov. On December 25, 2014, Parkhomov, a respected and experienced physicist, published a short report [1] on an experiment using a reactor similar to the one the Swedish-Italian group used in the Lugano experiment with Rossi’s E-Cat, with similar materials in the fuel.

Parkhomov reported significant excess heat from a very small amount of fuel, just as in other LENR experiments, but the released energy was in the kilowatt range, as with Rossi’s devices, which sets both apart from most other LENR experiments. Although the report was more a set of research notes than a scientific paper, the method was so simple and straightforward that it was quite convincing. It was obviously also important that Parkhomov had performed his experiment without any contact with Rossi or the experimenters at Lugano.

Long-time LENR researcher Michael McKubre has reviewed Parkhomov’s report in the magazine Infinite Energy. Meanwhile, Parkhomov has held two seminars in Russia on his findings and has released a second, updated report.

Parkhomov’s report has inspired other groups to attempt a similar replication of the E-Cat effect. The Martin Fleischmann Memorial Project (MFMP) had already planned a similar experiment, and the group is now ready to start this work, with support from Parkhomov.

Renowned LENR researcher Brian Ahern is also planning a similar experiment.

It’s also known that the Swedish-Italian group that performed the Lugano experiment is continuing its investigations of the effect.

Apart from these, there are probably many others trying the same thing without announcing it.

Interest appears to be high all over the world, and it is reflected in more media coverage than before. One example is a recent piece in Wired UK, noting that “if Parkhomov’s work can be copied, the Chinese may not need a licence.”

Some useful knowledge of this kind might come out of the collaboration between MFMP and the Italian researcher Francesco Piantelli, who used to work with the late Prof. Sergio Focardi before Focardi started to help Rossi.

MFMP visited Piantelli at his lab in Tuscany, Italy, in January 2015. The group established good contact with him and learned a lot from his long experience with nickel-hydrogen LENR systems, which differ from the kind of system Rossi uses, even though the main elements are the same.

It’s a good thing that MFMP sticks to the idea of open science, publishing results and experiments in real time, and that its members have declared they will never sign any kind of NDA. This gives good hope that new knowledge will be shared with other interested researchers, and that this knowledge might grow significantly over time.

All in all, things are starting to move, and they might move very fast now. On the other hand, it seems that we will not get much information from Rossi and his industrial partner Industrial Heat (IH) during 2015.

Rossi still claims that he and IH are operating a 1-megawatt plant installed at a customer’s premises on commercial terms, but says they will not be ready to show the working plant until it has been running for a year.

There’s no way to confirm this.

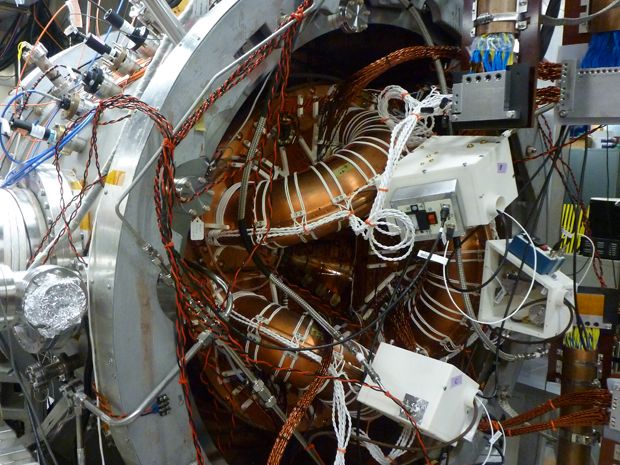

According to Sutherland, the big breakthrough was UW’s experimental discovery in 2012 of a physical mechanism called imposed-dynamo current drive (hence “dynomak”). By injecting current directly into the plasma, imposed-dynamo current drive lets the system control the helical fields that keep the plasma confined. The result is that you can reach steady-state fusion in a relatively small and inexpensive reactor. “We are able to drive plasma current more efficiently than previously possible,” says Sutherland. “With that efficiency can come higher current and a more compact, economical design.”